While the focus of Windows 7 was to fix Windows Vista, the focus of Windows 8 was to make Windows scalable to the point where it could run on tablets and phones. This had always been one of the blind spots for Microsoft developers – we all had beefy development and test machines that were always plugged into the wall, with fans to cool them. Everybody was used to Moore’s Law, which seemed to promise that hardware would always get faster, so reducing overhead was rarely a high priority.

When thinking of mobile devices (including laptops), most people focus on battery life (which is certainly important), but we also have to deal with thermal dissipation. Electronics crammed into very small spaces tend to get hot and they do not have fans to cool them down. Hence, the hardware itself usually can detect when it is too hot, and then it throttles itself to run slower (so it can cool down).

So while the hardware may be technically capable of handling certain loads, the actual load that it can handle at any given time varies depending on how hot it is. People mistakenly think that reducing power is not important if the device is plugged in and charging, but charging the device increases its temperature. Hence, saving power on mobile devices is important regardless of whether they are plugged in or not. This was not intuitive to many people.

At this point I was moved to the scheduler team under Steve Pronovost, who is a better person than me in almost every way and is currently a Distinguished Engineer at Microsoft. I learned a lot working for him. Many issues needed addressing to lower Windows’ power consumption on mobile devices, but I had two main areas of focus – both of which were coupled to the Desktop Window Manager (DWM).

The rest of this post attempts to describe the problems that I was attempting to solve, and becomes a little technical. Feel free to skip this if you are not interested in the details.

Area of focus #1: Enabling the use of hardware overlays.

One trick that mobile devices use to save power is to utilize smaller, fixed-function hardware cores. These cores include “hardware overlay” technologies, which I was an expert on due to my time working at Cirrus Logic. The display hardware in almost every phone has the ability to scan out several different regions of memory and blend them into a single image as it is displayed. Phones rely heavily on this to save power.

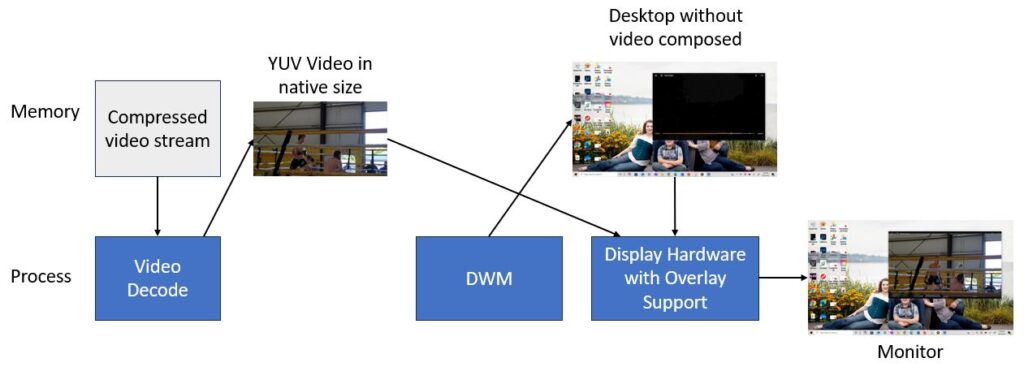

As an example, here is the typical video pipeline without hardware overlays:

And here is the pipeline utilizing hardware overlays:

In the top diagram, the main GPU core is generally used for video processing and for DWM composition, and for every raw frame of uncompressed video, at least two extra rendering passes are needed before it is displayed.

In the second diagram, the overlay hardware can do the colorspace conversion, video resizing, and blending without utilizing the main GPU core (so it can go to sleep if it is not being used for anything else). The second diagram also requires less CPU utilization.
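To make the blending step concrete, here is a minimal sketch of what the display hardware conceptually does when it scans out several planes and blends them into one image. It is a toy software model, not a real driver or hardware interface: the `Plane` class, the `scan_out` function, and the tuple-based pixel format are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Plane:
    """Hypothetical scanout plane: a pixel grid plus its position on screen."""
    pixels: list  # rows of (r, g, b, a) tuples, with a in the range 0.0..1.0
    x: int        # left edge on screen
    y: int        # top edge on screen


def scan_out(width, height, planes):
    """Blend planes back to front into a single frame.

    This mimics, in software, the per-pixel blend that overlay hardware
    performs during scanout, so no separate composition pass is needed.
    """
    frame = [[(0, 0, 0)] * width for _ in range(height)]
    for plane in planes:  # the first plane is the bottom layer
        for row_idx, row in enumerate(plane.pixels):
            for col_idx, (r, g, b, a) in enumerate(row):
                sx, sy = plane.x + col_idx, plane.y + row_idx
                if 0 <= sx < width and 0 <= sy < height:
                    dr, dg, db = frame[sy][sx]
                    # Standard "source over destination" alpha blend.
                    frame[sy][sx] = (
                        round(r * a + dr * (1 - a)),
                        round(g * a + dg * (1 - a)),
                        round(b * a + db * (1 - a)),
                    )
    return frame


# A 4x2 opaque desktop plane with a 2x1 half-transparent video plane on top.
desktop = Plane([[(10, 10, 10, 1.0)] * 4 for _ in range(2)], x=0, y=0)
video = Plane([[(200, 100, 50, 0.5)] * 2], x=1, y=0)
frame = scan_out(4, 2, [desktop, video])
```

In real overlay hardware the same idea applies per plane as it is read from memory, alongside the colorspace conversion and resizing mentioned above, which this sketch leaves out.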

When used carefully, overlay hardware can save significant power and improve performance, but careless use can cause issues.

Area of focus #2: Reduce the DWM overhead.

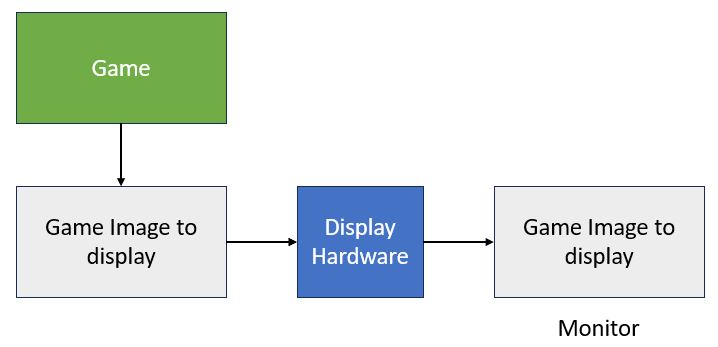

Before Windows Vista (when the DWM was first introduced), a game would typically allocate two buffers that it would use to display the final image. It would render to one buffer while the other was being displayed, and then would “present” the image, at which point we’d tell the display hardware to start displaying the newly rendered buffer at the start of the next monitor refresh cycle (called a VSYNC).
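The two-buffer scheme described above can be sketched as a small state machine. This is only a toy model under my own assumptions: `FlipSwapChain` and its method names are hypothetical and do not correspond to any Direct3D or DXGI API.

```python
class FlipSwapChain:
    """Toy model of a two-buffer flip chain (all names are illustrative)."""

    def __init__(self):
        self.buffers = ["", ""]    # contents of the two buffers
        self.front = 0             # index of the buffer being displayed
        self.pending_flip = False  # set by present(), consumed at VSYNC

    @property
    def back(self):
        """Index of the buffer the game is allowed to render into."""
        return 1 - self.front

    def render(self, image):
        """The game draws into the buffer that is not on screen."""
        self.buffers[self.back] = image

    def present(self):
        """Request a flip; it takes effect at the next VSYNC, not now."""
        self.pending_flip = True

    def vsync(self):
        """Start of a refresh cycle: apply any pending flip, then scan out."""
        if self.pending_flip:
            self.front = self.back
            self.pending_flip = False
        return self.buffers[self.front]


chain = FlipSwapChain()
chain.render("frame 1")
chain.present()
shown_first = chain.vsync()    # the flip happens here
chain.render("frame 2")        # rendered but never presented
shown_second = chain.vsync()   # the display keeps showing the old buffer
```

The point of the model is that no pixels are copied on a flip: presenting only changes which buffer the display hardware reads from at the next refresh.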

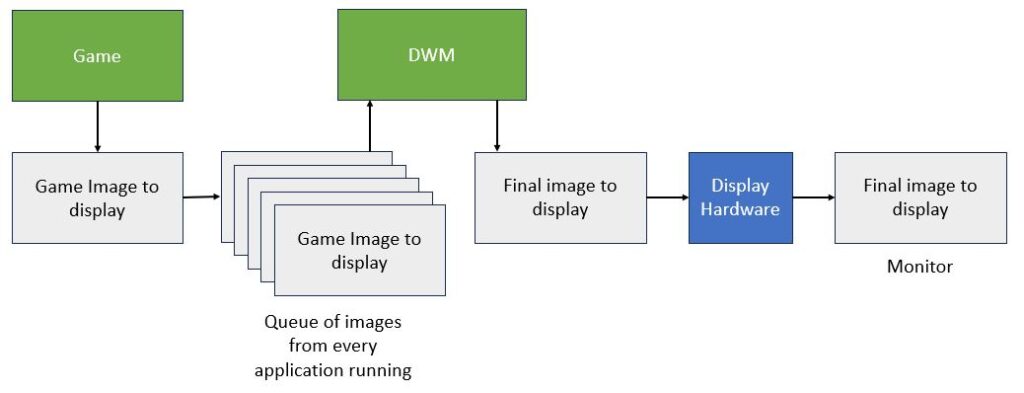

With the DWM, however, the game did not actually perform this flip; instead, we gave the data to the DWM to present. This was how it worked:

While this enables cool blended window frames and scenarios like this:

It also forces extra overhead outside of such scenarios:

- The DWM must always perform an extra composition pass, which takes GPU and CPU time away from the game and uses extra power.

- It incurs a latency of one VSYNC (if the refresh rate is 60 Hz, this equates to roughly 16.7 ms).
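The latency figure in the list above is simply the length of one refresh interval. As a quick worked example (the function names here are my own, chosen for illustration):

```python
def vsync_period_ms(refresh_hz):
    """Length of one refresh cycle in milliseconds."""
    return 1000.0 / refresh_hz


def added_latency_ms(refresh_hz, extra_vsyncs=1):
    """Latency added when a frame waits extra_vsyncs refresh cycles."""
    return extra_vsyncs * vsync_period_ms(refresh_hz)


latency_60 = added_latency_ms(60)    # about 16.67 ms
latency_120 = added_latency_ms(120)  # about 8.33 ms
```

So the cost of one extra VSYNC shrinks as the refresh rate rises, but at 60 Hz it is a full 1/60 of a second added to every frame.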

For most desktop systems (and even most laptops), this overhead was deemed acceptable, but for ARM processors designed to run on Android systems that don’t have a compositor, this overhead was often not acceptable.

During Windows 8 and Windows 8.1, most of my focus was implementing three features: DirectFlip, Multiplane Overlay Support (MPO), and IndependentFlip.