After we shipped Windows XP in late 2001, it became apparent that we needed to redesign the operating system architecture that supported the GPU. Before I get into this, I need to explain how things worked at that time (and why they needed fixing). This will be a little more technical than my previous posts.

GPUs are different from CPUs in many ways (especially the GPUs of 2003). GPU architectures have changed a lot since those days, and even then their architectures differed across vendors, so what I’m explaining here is a high-level generalization:

- A CPU is a general-purpose processor that focuses on a single, simple task at a time. A GPU is usually a collection of smaller processors with very specialized hardware functions. For example, a GPU might consist of:

  - A main GPU core that processes 3D operations.

  - A video decoder core that accelerates specific video decoding operations.

  - A core whose job is to copy data around.

- CPUs can access regular memory with few restrictions.

- CPUs use page tables to virtualize memory, so memory that is scattered across physical RAM can appear contiguous to each program.

- GPUs (especially discrete GPUs) usually had their own dedicated memory, and objects in that memory had to be physically contiguous. Often the different cores had different memory interfaces, so you had to know how a piece of GPU memory would be used at the time it was allocated.

- CPUs are preemptible and were built for virtualization (starting with the i386).

  - CPUs provide the illusion that many programs are running simultaneously on your computer, when in fact your CPU takes turns running each program for a short period of time. This works because the CPU can switch between programs very quickly: it has very little internal state, and that state can be saved and restored cheaply.

- GPUs, on the other hand, were not preemptible and were not built for virtualization. A game would typically set up a lot of data (command buffers, geometry, shaders, and textures/images) and then tell the hardware to go, at which point the GPU performed a gazillion operations in parallel to generate the 3D image. While the GPU was busy generating that image, it could not be interrupted or used for anything else until it finished rendering.

- Each game used as much GPU memory as it could – a common pattern was for a game to query the size of video memory and then assume that it could use all of it. Hence, it was unlikely that two games could efficiently share a GPU at the same time.
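To make the contiguous-memory constraint a bit more concrete, here is a toy first-fit allocator over a flat block of "video memory". It is a simplified model for illustration only, not how any real driver managed memory; the `ContiguousHeap` class, its methods, and the sizes (arbitrary units) are all hypothetical. It shows how there can be plenty of free memory in total while a request still fails, because no single free run is large enough.

```python
class ContiguousHeap:
    """Hypothetical toy model of dedicated GPU memory in which every
    allocation must be one physically contiguous range."""

    def __init__(self, size):
        self.size = size
        self.blocks = {}  # handle -> (offset, length)
        self.next_handle = 1

    def _free_runs(self):
        """Return (offset, length) for every gap between allocated blocks."""
        runs, cursor = [], 0
        for offset, length in sorted(self.blocks.values()):
            if offset > cursor:
                runs.append((cursor, offset - cursor))
            cursor = offset + length
        if cursor < self.size:
            runs.append((cursor, self.size - cursor))
        return runs

    def total_free(self):
        return sum(length for _, length in self._free_runs())

    def alloc(self, length):
        """First fit: use the first gap that is big enough, else return None."""
        for offset, run in self._free_runs():
            if run >= length:
                handle = self.next_handle
                self.next_handle += 1
                self.blocks[handle] = (offset, length)
                return handle
        return None  # no single contiguous gap can hold the request

    def free(self, handle):
        del self.blocks[handle]


heap = ContiguousHeap(100)
a = heap.alloc(30)  # occupies 0..29
b = heap.alloc(30)  # occupies 30..59
c = heap.alloc(30)  # occupies 60..89
heap.free(a)        # gap 0..29
heap.free(c)        # gap 60..99
print(heap.total_free())  # 70 units free in total
print(heap.alloc(45))     # None: the largest single gap is only 40
```

With CPU-style page tables, those 70 free units could be mapped into one virtually contiguous range; a memory that requires physically contiguous objects has no such shortcut.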

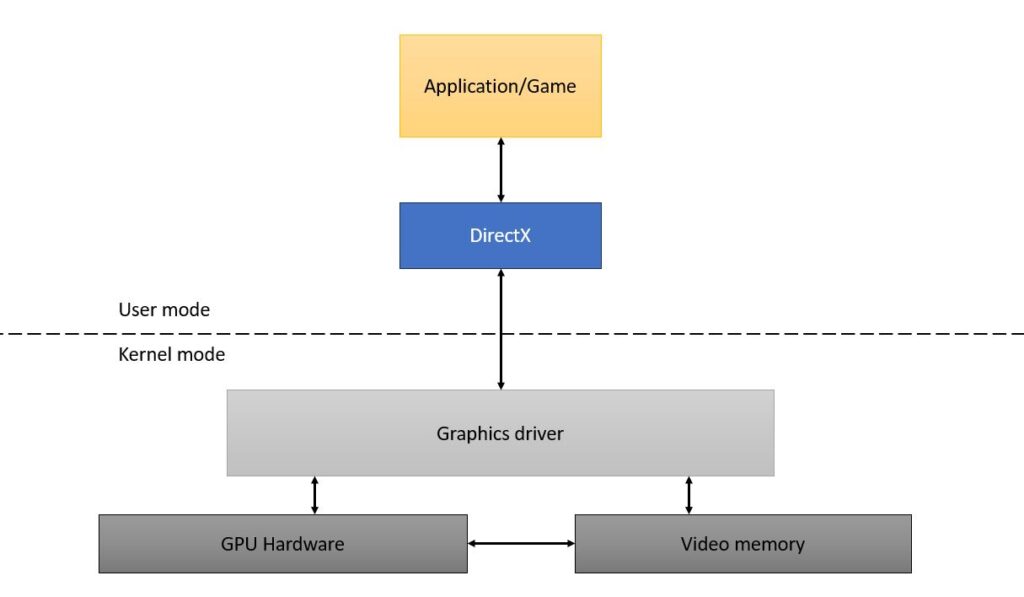

The operating system design for GPU support was also pretty simple:

- There were two primary APIs that applications could call to operate the GPU: DirectX (specifically Direct3D, or D3D) and OpenGL.

- Applications run in user mode, which means that each application has its own address space and is isolated enough from other applications that if one crashes, only that application is affected (the remaining applications are fine). These applications call into the D3D or OpenGL APIs, which in turn call into the graphics driver.

- The graphics drivers lived entirely in kernel mode, where everything shares a single memory space. Whatever happens in kernel mode impacts the entire system. If a kernel mode driver crashes, the entire computer crashes.

The resulting architecture looked like this:

This driver model (XDDM) worked at the time because GPUs were used primarily by games, and people normally did not play two games at once. But things started to change around 2003:

- Some important people within Microsoft didn’t like that, in fullscreen gaming mode, you didn’t see every pixel the operating system wanted you to see. If the operating system wanted to display a message, they wanted you to see it regardless of whether you were playing a game.

- Other uses for 3D besides games and video were becoming popular.

- Windows wanted to create a 3D compositor that would draw every pixel on the screen. This had huge implications – not least that multiple applications were now guaranteed to share the GPU.

- Many other drivers in Windows could be updated without rebooting the system, but graphics drivers still required a reboot.

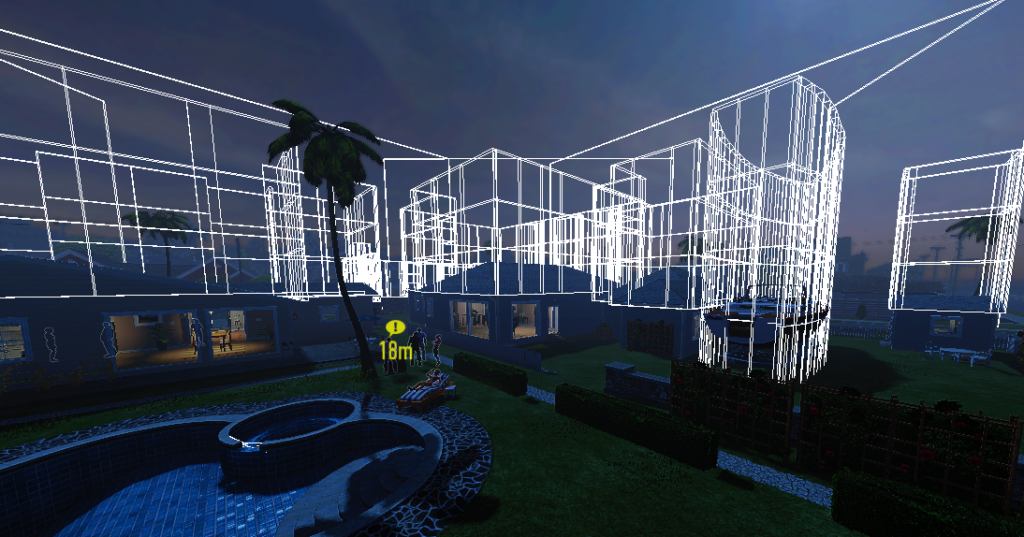

- Graphics vendors were always adding hacks and features to their drivers to differentiate themselves from other vendors.

  - For example, a driver might contain a cheat for a video game that let you see through walls. For this to work, the driver had to recognize each game’s patterns and then do something other than what the game told it to do. This made the drivers increasingly complex, and with that complexity came more bugs.

- Graphics drivers became by far the biggest cause of bugchecks (blue screens) across all of Windows, and we needed to reduce these crashes.

In summary, we had to redesign the operating system’s support for GPUs so that:

- Multiple programs could use the GPU simultaneously with reasonable performance.

- A lot of the existing driver code moved out of kernel mode and into user mode.

- All existing GPU applications and games still ran reasonably well on the new infrastructure.

- The graphics driver could be updated without rebooting the system.
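The first goal is hard largely because of the run-to-completion behavior described earlier. The toy simulation below is a rough sketch, not how Windows actually scheduled GPU work: it compares a non-preemptive queue with CPU-style round-robin time slicing. The job names, the "ms" units, and the quantum are hypothetical, and it ignores the cost of saving and restoring state, which is cheap on a CPU.

```python
from collections import deque


def run_to_completion(jobs):
    """No preemption: each job runs until it finishes before the next starts.
    jobs is a list of (name, work) in submission order; returns finish times."""
    clock, finished = 0, {}
    for name, work in jobs:
        clock += work
        finished[name] = clock
    return finished


def round_robin(jobs, quantum):
    """Preemptive time slicing: jobs take turns for at most `quantum` units each.
    Switching between jobs is assumed to be free."""
    queue = deque(jobs)
    clock, finished = 0, {}
    while queue:
        name, remaining = queue.popleft()
        step = min(quantum, remaining)
        clock += step
        if remaining > step:
            queue.append((name, remaining - step))  # preempted, back of the line
        else:
            finished[name] = clock
    return finished


# Hypothetical workload: a game submits a long frame, then the compositor
# submits a tiny job that it needs done right away.
jobs = [("game_frame", 16), ("compositor", 1)]
print(run_to_completion(jobs))       # {'game_frame': 16, 'compositor': 17}
print(round_robin(jobs, quantum=2))  # {'compositor': 3, 'game_frame': 17}
```

Without preemption, the one-unit compositor job waits behind the entire frame; with time slicing it finishes almost immediately, at the cost of the game’s frame finishing one unit later.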

It ended up being a very fun and frustrating project.